Introduction

Data moves faster than ever. If you work in software or data, you have likely noticed that managing data pipelines can be messy, slow, and prone to breaking. That is where DataOps comes in: it brings the speed, reliability, and automation of DevOps to data analytics.

If you want to prove your skills in this rapidly growing field, the DataOps Certified Professional (DOCP) is a strong credential to aim for. I have seen many engineers struggle to bridge the gap between “just moving data” and building robust, automated data platforms that businesses can actually trust. This guide walks you through what you need to know about the DOCP certification, without the confusing jargon, so you can decide whether it is the right career move for you.

Quick View: DataOps Certified Professional (DOCP)

Here is a snapshot of the certification details you need before we dive deep.

| Feature | Details |
|---|---|
| Certification Name | DataOps Certified Professional (DOCP) |
| Track | DataOps & Data Engineering |
| Level | Intermediate to Advanced |
| Who it is for | Data Engineers, DevOps Engineers, ETL Developers, Architects |
| Prerequisites | Basic understanding of Linux, Database concepts, and SDLC |
| Skills Covered | Data Pipelines, Apache NiFi, Kafka, StreamSets, Automation, Governance |
| Recommended Order | Linux Basics → DevOps Basics → DOCP |

What is the DataOps Certified Professional (DOCP)?

This certification is designed to validate your ability to build, manage, and automate data pipelines. It is not just about writing SQL queries or moving files; it is about treating data workflows like a production software product.

The course covers the entire lifecycle—from data ingestion to processing and delivery—using modern tools that major tech companies rely on today. You will learn how to reduce “cycle time” (the time it takes to get data from source to user) and improve data quality through automated testing and monitoring. It effectively bridges the gap between data science teams and operations teams, solving the “it worked on my laptop” problem for data models.

Who Should Take It?

- Data Engineers: If you are tired of manually fixing broken ETL scripts every morning, this course teaches you how to automate those fixes.

- DevOps Engineers: You are increasingly asked to manage Kafka clusters or Airflow DAGs. This gives you the domain knowledge to support data teams effectively.

- Software Engineers: If you are moving into a backend role that handles massive data streams, you need to understand the difference between transactional app data and analytical data pipelines.

- Team Leads/Managers: You need to understand the architecture to hire the right people and lead modern data teams effectively.

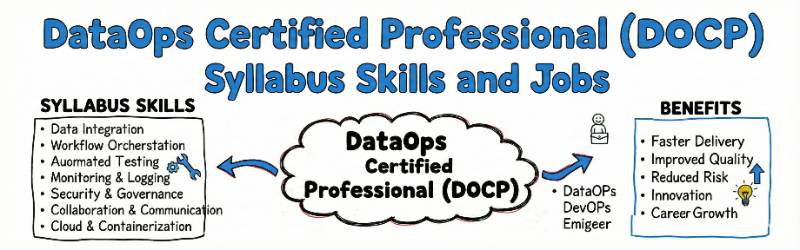

Skills You Will Gain

- Automated Data Pipelines: You will learn to build workflows that are “self-healing.” This means if a server blips, the pipeline pauses and retries instead of crashing and waking you up at 3 AM.

- Tool Mastery: You will get deep, hands-on experience with industry-standard tools. This isn’t just theory; you will configure Apache NiFi, StreamSets, Confluent Kafka, Talend, and Apache Camel yourself.

- Data Governance: You will understand how to automatically track where data comes from (lineage). If a report is wrong, you can trace it back to the exact source file in seconds.

- Orchestration: You will learn to schedule and monitor complex data flows so that dependencies are managed correctly (e.g., “don’t run the daily report until the nightly data load is actually finished”).

- Production Deployment: You will learn how to move data models and pipelines from a development environment to a production environment safely, using CI/CD principles tailored for data.
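
To make the “self-healing” idea from the first bullet concrete, here is a minimal Python sketch of pausing and retrying with exponential backoff. It only illustrates the pattern and does not show how NiFi or StreamSets implement retries internally. The names `run_with_retries` and `flaky_load`, and the delay values, are hypothetical and chosen for this example.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    task: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run task; on a connection error, pause and retry with a doubling delay."""
    attempt = 1
    while True:
        try:
            return task()
        except ConnectionError:
            if attempt >= max_attempts:
                raise  # out of retries: surface the failure so alerting can fire
            sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...
            attempt += 1


# Hypothetical flaky step: the "server blips" twice, then the load succeeds.
calls = {"n": 0}


def flaky_load() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("server blip")
    return "loaded"


delays = []  # record the pauses instead of really sleeping
result = run_with_retries(flaky_load, sleep=delays.append)
print(result, delays)  # loaded [0.5, 1.0]
```

Passing `sleep` as a parameter is a design choice for the sketch: it lets you test the retry logic without waiting for real delays.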

Real-World Projects You Will Be Able To Do

After finishing this certification, you won’t just know the theory. You will be confident enough to build:

- Real-Time Streaming Pipeline: You will be able to ingest live data (like IoT sensor readings or server logs) using Kafka, process it in real-time to find anomalies (like a temperature spike), and store it for analysis.

- Automated ETL Workflows: You will replace fragile, manual cron jobs with robust pipelines using Apache NiFi or StreamSets. These pipelines will handle error retries and send you alerts on Slack if something goes wrong.

- Microservices for Data: You will create Java or Python-based microservices that handle specific data processing tasks. This makes your architecture modular—if one part breaks, the rest keeps running.

- Data Health Dashboards: You will set up monitoring dashboards that act like a “check engine light” for your data, alerting you when data quality drops before the business executives notice.
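
The anomaly check from the streaming project above can be sketched in plain Python, without Kafka, assuming the readings arrive as an iterable of numbers. The function name `detect_spikes`, the window size, and the `max_jump` threshold are all assumptions made for illustration; a real pipeline would tune them to the sensor.

```python
from collections import deque
from statistics import mean
from typing import Iterable, Iterator


def detect_spikes(
    readings: Iterable[float], window: int = 5, max_jump: float = 10.0
) -> Iterator[tuple[int, float]]:
    """Yield (index, value) for readings far above the recent average."""
    recent = deque(maxlen=window)  # rolling baseline of normal readings
    for i, value in enumerate(readings):
        if len(recent) == window and value - mean(recent) > max_jump:
            yield i, value  # spike: report it and keep it out of the baseline
        else:
            recent.append(value)


# Hypothetical temperature stream with one spike at index 5.
temps = [21.0, 21.5, 20.9, 21.2, 21.1, 45.0, 21.3]
spikes = list(detect_spikes(temps))
print(spikes)  # [(5, 45.0)]
```

Because the function is a generator, it handles one reading at a time and never needs the whole stream in memory, which is the same shape a Kafka consumer loop would have.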

Preparation Plan: How to Pass

Depending on your current schedule and experience level, here is how you should tackle the learning path to ensure success.

The “Sprint” Plan (14 Days)

This is intense and assumes you already know Linux and some data basics.

- Days 1-3: Focus entirely on the Linux command line and basic data concepts. Ensure your local environment (Docker/VM) is ready so you don’t waste time fixing installation issues later.

- Days 4-7: Deep dive into Apache NiFi and Kafka. Set up a local cluster. Try moving a file from a local folder to a Kafka topic.

- Days 8-11: Work on StreamSets and Talend. Build one dummy pipeline that reads a CSV file, changes a column, and writes it to a database.

- Days 12-14: Review the Interview Kit (which has 50 sets of questions) and take practice exams. Focus on “scenario” questions.
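
Before you build the Days 8-11 dummy pipeline in StreamSets or Talend, it can help to see the same three steps (read a CSV, change a column, write to a database) in a few lines of Python. This is a rough sketch using only the standard library. The sample data, the `readings` table, and the Fahrenheit-to-Celsius conversion are hypothetical stand-ins for whatever file and transformation you choose.

```python
import csv
import io
import sqlite3

# Hypothetical sample data standing in for a CSV file on disk.
SAMPLE_CSV = "id,name,temp_f\n1,sensor-a,68.0\n2,sensor-b,212.0\n"


def load_csv_to_db(csv_text: str, conn: sqlite3.Connection) -> int:
    """Read CSV rows, convert temp_f to Celsius, and insert them into SQLite."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings "
        "(id INTEGER PRIMARY KEY, name TEXT, temp_c REAL)"
    )
    rows = [
        (int(r["id"]), r["name"], round((float(r["temp_f"]) - 32) * 5 / 9, 2))
        for r in csv.DictReader(io.StringIO(csv_text))
    ]
    with conn:  # commits on success, rolls back if an insert fails
        conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", rows)
    return len(rows)


conn = sqlite3.connect(":memory:")  # in-memory database for practice
loaded = load_csv_to_db(SAMPLE_CSV, conn)
result = conn.execute("SELECT name, temp_c FROM readings ORDER BY id").fetchall()
print(loaded, result)  # 2 [('sensor-a', 20.0), ('sensor-b', 100.0)]
```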

The “Professional” Plan (30 Days – Recommended)

This is the balanced approach for working professionals.

- Week 1: Master the core DataOps principles. Set up your lab environment using Vagrant or Docker. Get comfortable with the user interfaces of the tools.

- Week 2: Ingestion tools focus. Spend 2-3 hours a night practicing with NiFi and StreamSets. Ingest data from different sources (CSV, JSON, SQL database).

- Week 3: Streaming & Processing. Focus on Kafka Connect and Apache Beam. Learn how to handle “backpressure” (when data comes in faster than you can process it).

- Week 4: Final Project implementation. Build the “Real-Time Scenario” project included in the course. Review the interview questions and do a mock interview if possible.
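
The “backpressure” idea from Week 3 is easier to grasp with a small experiment. The sketch below uses a bounded in-memory queue in Python: when the buffer is full, the producer is forced to wait until the slower consumer catches up. It illustrates the concept only and is not how Kafka or NiFi implement it; `run_pipeline`, the buffer size, and the simulated delay are assumptions for the example.

```python
import queue
import threading
import time


def run_pipeline(n_items: int, buffer_size: int = 3) -> tuple[list[int], int]:
    """Push n_items through a bounded buffer to a deliberately slow consumer."""
    buffer = queue.Queue(maxsize=buffer_size)
    processed = []
    max_depth = 0

    def consumer() -> None:
        while True:
            item = buffer.get()
            if item is None:  # sentinel: the producer has finished
                break
            time.sleep(0.001)  # simulate slow processing
            processed.append(item * 2)

    worker = threading.Thread(target=consumer)
    worker.start()
    for i in range(n_items):
        buffer.put(i)  # blocks while the buffer is full: this is the backpressure
        max_depth = max(max_depth, buffer.qsize())
    buffer.put(None)
    worker.join()
    return processed, max_depth


processed, max_depth = run_pipeline(20)
print(len(processed), max_depth <= 3)  # 20 True
```

Without the `maxsize` limit, the producer would keep filling memory as fast as it could; with it, the slow stage sets the pace for the whole flow.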

The “Busy Manager” Plan (60 Days)

For those with limited time who need to learn the concepts deeply.

- Weeks 1-3: Watch the self-learning videos during commutes or lunch breaks. Focus on understanding the architecture and why we use these tools, rather than just the clicks.

- Weeks 4-6: Dedicate weekends to hands-on practice. Focus on one tool at a time (e.g., “This weekend is Kafka weekend”).

- Weeks 7-8: Project work and review. Assemble all the pieces into a final project. Review the theoretical concepts to ensure you can explain them to stakeholders.

Common Mistakes to Avoid

- Ignoring the Labs: You cannot pass this exam or survive a real job by just watching videos. You must install the tools and break things to learn how to fix them.

- Skipping Linux Basics: Most DataOps tools (Kafka, NiFi) run natively on Linux. If you struggle with the command line (cd, ls, grep, permissions), you will struggle to configure these tools.

- Focusing Only on One Tool: DataOps is about the flow between tools (e.g., NiFi to Kafka to DB). Don’t just learn them in isolation; learn how they talk to each other.

- Underestimating Configuration: A lot of the work is in the settings (memory limits, buffer sizes, timeout settings). Pay attention to these details during your practice, as they cause most production issues.

Best Next Certification

Once you have the DOCP, where do you go?

- Same Track: Certified Kafka Administrator (to deepen your specific skills in the most popular streaming platform).

- Cross-Track: Certified Kubernetes Administrator (CKA) (to learn how to host and scale these data tools on modern infrastructure).

- Leadership: Certified DevOps Manager (to move up into a role where you lead the teams building these platforms).

Choose Your Path

| Path | Focus Area | Key Certifications |
|---|---|---|
| DevOps | Infrastructure & CI/CD | Certified DevOps Architect, Docker/Kubernetes Certs |
| DevSecOps | Security in Pipelines | Certified DevSecOps Professional (CDSP) |
| SRE | Reliability & Uptime | Site Reliability Engineering (SRE) Foundation/Practitioner |
| AIOps / MLOps | AI Model Operations | MLOps Certified Professional |
| DataOps | Data Pipelines & Flow | DataOps Certified Professional (DOCP) |
| FinOps | Cloud Cost Optimization | Certified FinOps Practitioner |

Role → Recommended Certifications Map

Find your current or desired job title below to see exactly what you should study to advance your career.

| Role | Recommended Certification | Why? |
|---|---|---|
| DevOps Engineer | DevOps Architect + DataOps Certified Professional | Adds huge value by letting you support data teams, not just software teams. You become a “double threat.” |
| SRE | SRE Practitioner + Python Scripting | Helps you automate reliability for data infrastructure, which is often more fragile than app infrastructure. |
| Platform Engineer | Kubernetes Administrator (CKA) + DataOps Certified Professional | You build the platform that the data tools run on; this helps you understand your users (the data scientists). |
| Cloud Engineer | AWS/Azure Solutions Architect + Terraform | Focuses on the underlying infrastructure (storage, compute) for data lakes. |
| Security Engineer | DevSecOps Professional + Certified Ethical Hacker | Essential for securing sensitive data pipelines (PII, financial data), which are prime targets for attacks. |
| Data Engineer | DataOps Certified Professional + Spark/Hadoop Certs | This is your core skill set: automating the work you do every day to make it scalable. |
| FinOps Practitioner | FinOps Practitioner + AWS Cost Management | Helps you understand the costs of data storage and processing, which can be huge. |
| Engineering Manager | DevOps Manager + Scrum Master | Gives you the vocabulary and understanding to manage high-performing data teams and realistic timelines. |

Top Institutions for DataOps Training

If you are looking for training that includes the certification, these institutions are highly recommended for their practical approach.

1. DevOpsSchool

This is widely considered the gold standard for this specific certification. They offer a massive repository of learning materials, including over 50 sets of interview questions and real-world projects. Their biggest selling point is the lifetime technical support, meaning their consultants will help you even after you have finished the course and are working on a job.

2. Cotocus

Cotocus specializes in corporate and group training, making them an excellent choice if you are a manager looking to upskill your entire team at once. They focus heavily on the consulting side of things, so their training often includes “war stories” and real scenarios from their client projects. Their instructors are usually working consultants who teach what they practice.

3. Scmgalaxy

If you are a tool-centric learner who loves to dig deep into configurations, Scmgalaxy is the right place. They have a strong community focus and provide excellent resources on Source Code Management (SCM) and pipeline orchestration. Their DataOps training is very detailed regarding the “Ops” side—how to version control your data models and manage infrastructure as code.

4. BestDevOps

BestDevOps is known for its intensive, bootcamp-style training sessions designed to get you ready fast. They focus on the “best practices” and industry standards, cutting out the fluff to focus on what you actually need to pass the exam and do the job. It is a great option for professionals who want a no-nonsense, direct approach to learning.

5. DevSecOpsSchool

Security is a huge part of data management, and DevSecOpsSchool approaches the DOCP certification with a “security-first” mindset. While teaching you the standard DataOps curriculum, they place extra emphasis on how to secure your pipelines and protect sensitive data (PII). This is the perfect choice if you work in banking, healthcare, or any regulated industry.

6. SRESchool

Site Reliability Engineering (SRE) and DataOps overlap significantly when it comes to uptime and monitoring. SRESchool’s training focuses on how to build data pipelines that never go down. They teach the DOCP curriculum with a strong lens on observability, monitoring, and error budgeting, making it ideal for engineers who need to build mission-critical data platforms.

7. AIOpsSchool

As we move toward AI-driven operations, AIOpsSchool integrates the concepts of Artificial Intelligence into their DataOps training. They are great for forward-looking engineers who want to understand how DataOps feeds into Machine Learning models. Their take on the certification prepares you not just for today’s data needs, but for the AI-heavy future.

8. DataOpsSchool

As the name suggests, this institution is purely dedicated to the DataOps niche. Unlike generalist schools, every instructor here is a DataOps specialist. They offer the most focused and deep-dive curriculum into tools like Apache NiFi and StreamSets, making them the best choice if you want to become a subject matter expert in this specific domain.

9. FinOpsSchool

Data processing can be incredibly expensive in the cloud, and FinOpsSchool brings that financial awareness to their DataOps training. While covering the technical certification, they also teach you how to build cost-efficient pipelines. This is a unique angle that is highly valued by employers who want to keep their AWS or Azure bills under control.

FAQs: DataOps Certified Professional (DOCP)

1. Is coding required for this certification?

You don’t need to be a hardcore software developer, but knowing basic Python or Java helps significantly. Many tools like NiFi allow for custom scripting, and the microservices project will require some coding logic.

2. How long does the training take?

The course is approximately 60 hours. Most working professionals finish it in 4-6 weeks by attending weekend classes and practicing during the week.

3. What are the prerequisites?

You should have a PC with at least 8GB RAM (ideally 16GB) because running tools like Kafka and NiFi simultaneously is resource-heavy. You also need a basic understanding of SDLC and database concepts.

4. Does this cover Cloud DataOps?

Yes. While the labs might run on local VMs or Docker for cost reasons, the concepts and tools (Kafka, NiFi, Talend) are the exact same ones used in AWS, Azure, and Google Cloud environments.

5. How hard is the exam?

It is practical-focused. If you have done the labs and the final project, you will pass. If you only memorized theory from slides, you will likely struggle with the scenario-based questions.

6. Will this help me get a job?

Absolutely. Companies are drowning in data and are desperate for people who can automate the cleaning, movement, and management of that data. It is a high-demand niche with a shortage of skilled people.

7. Can I take this online?

Yes, both the training and the certification exam are fully online. You can learn and get certified from anywhere in the world.

8. What is the value of the “Lifetime Support” mentioned?

Institutes like DevOpsSchool offer lifetime technical support. This is huge—it means if you get stuck on a DataOps problem in your actual job 6 months later, you can reach out to them for guidance.

9. Is the certification recognized globally?

Yes. The skills are based on open-source, widely used tools, including Apache Software Foundation projects such as Kafka and NiFi. The certification proves you know these tools, which are used by companies worldwide.

10. Do I get access to the materials after the course?

Yes, you get lifetime access to the LMS, which includes recordings, presentations, and step-by-step guides. You can review them whenever you need a refresher.

11. What if I miss a class?

Class recordings are uploaded to the LMS, so you can catch up. You can also re-attend the missed session in a future batch within 3 months.

12. Can I get a refund if I don’t like it?

Generally, once training is confirmed, there is no refund. However, you can request demo videos beforehand to check if the teaching style suits you.

FAQs: DataOps Certified Professional (DOCP)

1. Is coding required for this certification?

You do not need to be a hardcore software developer to pass this certification. However, having a basic understanding of scripting (like Python or Bash) is very helpful. Many DataOps tools like Apache NiFi allow you to use low-code drag-and-drop interfaces, but knowing how to read and tweak a little bit of code will make you much more effective when building complex pipelines.

2. How long does the training and preparation take?

The training course is typically around 60 hours of live sessions. For a working professional dedicating weekends to classes and a few hours during the week for practice, the entire process—from starting the course to passing the exam—usually takes about 4 to 6 weeks. It is designed to fit into a busy schedule.

3. What are the hardware prerequisites for the labs?

Since this is a hands-on course where you will be running powerful tools like Kafka, NiFi, and StreamSets, you need a decent computer. I strongly recommend a laptop or PC with at least 16GB of RAM. If you have 8GB, you might struggle to run multiple services at the same time without your system slowing down. You will also need admin rights to install Docker or Virtual Machines.

4. Does this certification cover Cloud DataOps (AWS/Azure)?

Yes, absolutely. While the labs often use local Virtual Machines or Docker containers to save you money on cloud bills, the concepts are universal. The tools you learn (like Kafka and NiFi) work exactly the same way whether they are running on your laptop, on an AWS EC2 instance, or in an Azure Kubernetes Service cluster. The skills are 100% transferable to the cloud.

5. How hard is the exam?

The exam is practical and scenario-based, not just multiple-choice memory questions. If you have completed the labs and the final project yourself, you will find it straightforward. However, if you only watched the videos without doing the hands-on work, you will likely struggle. It tests your ability to do the job, not just talk about it.

6. Will this certification help me get a job?

Yes. Companies are currently drowning in data and are desperate for people who can automate the movement and cleaning of that data. There is a shortage of skilled professionals who understand both Data (SQL, ETL) and Ops (Automation, CI/CD). This certification proves you can bridge that gap, making you a very attractive candidate for high-paying roles.

7. Is the certification recognized globally?

Yes. The certification focuses on open-source, industry-standard tools (like Apache NiFi, Kafka, and ELK Stack) that are used by Fortune 500 companies around the world. It is not tied to a single proprietary vendor tool that might disappear. Because it validates skills on these universally used technologies, it is recognized by employers globally.

8. What happens if I get stuck during my work after the course is over?

One of the biggest benefits of taking this through DevOpsSchool is their lifetime technical support. This means if you get a job six months later and run into a tough DataOps problem in your real project, you can actually reach out to their community and trainers for guidance. You are not just buying a course; you are joining a support network for your career.

Testimonials

“The training was very useful and interactive. The trainer helped develop confidence in all of us. I really liked the hands-on examples covered during the program.”

— Indrayani, India

“Very well organized training. It helped a lot to understand the DataOps concept and details related to various tools. Very helpful.”

— Sumit Kulkarni, Software Engineer

“Good training session about basic concepts. Working sessions were also good. Appreciate the knowledge displayed in the training.”

— Vinayakumar, Project Manager, Bangalore

Conclusion

The DataOps Certified Professional (DOCP) is more than just a certificate; it is a clear signal to the market that you understand the future of data engineering. In a world where data is called the “new oil,” you are the person who builds the refineries and pipelines that make that oil useful. I have seen too many teams stuck in a cycle of fixing fragile, manual scripts every morning, wasting time on problems that automation could solve. This certification gives you the blueprint to build self-healing, automated systems that run smoothly while you sleep. If you are an engineer looking to future-proof your career, or a manager trying to fix a slow data team, this training bridges the gap between “making it work” and “making it scalable.” The tools you will master—Kafka, NiFi, StreamSets—are not going away; they are becoming the backbone of modern enterprise architecture.